Know Your Ears – Neural Signal Processing

In the final stage of our little journey through the human auditory system, we get to a couple of fascinating implementations of neural signal processing algorithms.

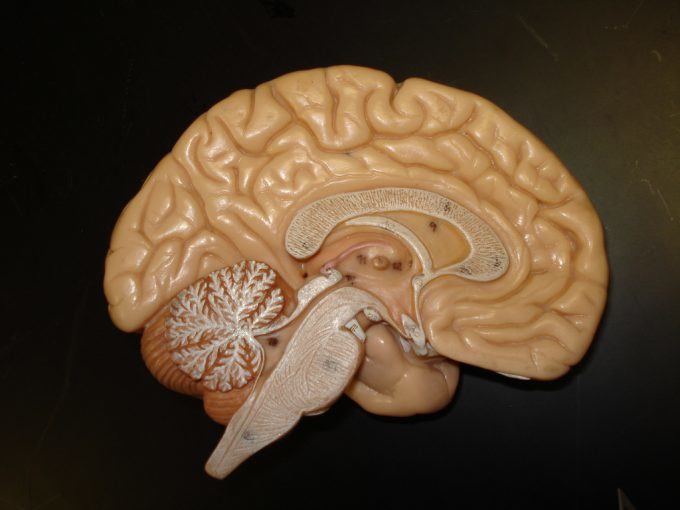

I’m constantly amazed and fascinated by the capabilities of the human body and especially the brain. It’s an incredibly efficient and effective machine for processing any type of information. But what’s even more amazing is what we already know today about the complex mechanisms and processes that create our perception of reality.

The following is a very brief overview of some of the principles of sound processing we have scientific evidence for. The details are infinitely complex, so I want to focus on a few basic principles that significantly shaped my viewpoint on sound and music.

The processing discussed below happens in different places along the way from the inner ear via the brain stem to the auditory cortex. To keep things short and focused, I’ll leave out the details of anatomy this time. Fortunately, you don’t need to know where exactly the nucleus lateralis lemnisci is located to understand the processes. The same goes for the exact evidence that led to the conclusion that these mechanisms likely exist (usually a mixture of psychoacoustic research, neurobiology and ethically disputable experiments with barn owls).

What is Neural Signal Processing?

The key function of neural signal processing in this context is to create a large set of different representations from the sensory signals that the ears produce. In many ways, this is similar to digital signal processing algorithms, and many advanced algorithms for speech enhancement, speech recognition, audio compression etc. are based on these findings.

There are three main functions implemented by neural signal processing:

- Enhancement of relevant information (noise reduction, dereverberation)

- Extraction of hidden information (spatial information, pitch, rhythm, speech features)

- Separation of different sources (or “objects”)

This way, a vast amount of “decoded” information is created that serves as input to the enormous pattern recognition and learning machines that our brains consist of. You can imagine it as a large screen filled with all kinds of analyzers, spectrograms and advanced analysis plots.

Tonotopic Representations

As we learned last week, the decomposition of sound into different frequency components already happens in the cochlea. This decomposition is preserved through many of the known stages of neural signal processing. That means sounds with similar frequency content lead to neural activity in nearby areas, even in the higher stages of the auditory cortex.

The same goes for other features like interaural differences or modulation frequencies, as we’ll see below. That means we can literally find “pictures” that represent certain “measurements” in several areas of the brain, for example ones that are very similar to what you might know as a spectrogram.

Binaural Processing

So what kinds of measurements are actually processed neurally, and how does it work? A very important class are time and level differences between the signals from both ears, which are really useful for figuring out which sounds come from what direction.

Neurobiological research suggests that the analysis of interaural time and level differences is implemented using some very simple neurons as building blocks. These basic neurons are very similar to logic gates in digital electronics, or to basic mathematical operations like addition or multiplication. Instead of binary states or numbers, these neurons “compute” mostly with the rate at which they fire electrical impulses.

To illustrate, here’s an example of a simple network model that can compute a kind of cross-correlation to assess interaural time differences. The leftmost outputs will show more activity if the right ear signal is delayed with respect to the left ear signal.

For this example, we only need neurons that forward an arriving activity with a slight delay and neurons that only fire if the two “inputs” are excited simultaneously.
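The delay-and-coincidence idea can be sketched in a few lines of Python. This is only a toy model under loud assumptions: spike trains are lists of 0s and 1s on a fixed time grid, each lag stands for one coincidence neuron fed through a different delay line, and the names `coincidence_counts` and `estimate_itd` are made up for this illustration rather than taken from any library or study.

```python
import random


def coincidence_counts(left, right, max_lag):
    """Count coincidences per internal delay (a discrete cross-correlation)."""
    n = len(left)
    counts = {}
    for lag in range(-max_lag, max_lag + 1):
        total = 0
        for t in range(n):
            u = t + lag
            if 0 <= u < n:
                # AND-like neuron: contributes only if both inputs are active.
                total += left[t] & right[u]
        counts[lag] = total
    return counts


def estimate_itd(left, right, max_lag):
    """Return the lag (in samples) of the most active coincidence neuron."""
    counts = coincidence_counts(left, right, max_lag)
    return max(counts, key=counts.get)


# Hypothetical test input: a random spike train for the left ear and a
# copy of it, delayed by 3 samples, for the right ear.
rng = random.Random(0)
left = [1 if rng.random() < 0.2 else 0 for _ in range(2000)]
delay = 3
right = [0] * delay + left[:-delay]

print(estimate_itd(left, right, max_lag=8))  # prints 3
```

A positive result means the right ear signal lags the left one. The row of counts returned by `coincidence_counts` plays the role of the row of output neurons in the network model.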

In a similar but slightly more complicated way, a representation of level differences can also be created. Using such simple building blocks, almost any signal processing algorithm can be implemented. For example a filter bank…

Modulation Spectral Analysis

Another important dimension that is very likely analyzed through neural signal processing is the frequency of amplitude modulations. Psychoacoustic research suggests that audio signals are further decomposed into different modulation frequency components using a filter bank configuration similar to that of the audio frequency decomposition implemented in the cochlea (in terms of frequency resolution).
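To make the idea of a second, modulation-domain analysis more tangible, here is a rough Python sketch. It is not a model of the actual neural filter bank: the envelope is extracted by rectification and a moving average, and the modulation "bands" are just single-frequency Fourier probes. All names, the test tone and the band list are assumptions chosen for illustration.

```python
import math


def am_tone(fs, duration, carrier_hz, mod_hz, depth):
    """Sine carrier whose amplitude is modulated at mod_hz."""
    n = int(fs * duration)
    return [
        (1.0 + depth * math.cos(2 * math.pi * mod_hz * i / fs))
        * math.sin(2 * math.pi * carrier_hz * i / fs)
        for i in range(n)
    ]


def envelope(signal, window):
    """Full-wave rectify, then smooth with a moving average."""
    rectified = [abs(s) for s in signal]
    running = sum(rectified[:window])
    out = [running / window]
    for i in range(window, len(rectified)):
        running += rectified[i] - rectified[i - window]
        out.append(running / window)
    return out


def modulation_energy(env, fs, mod_hz):
    """Magnitude of the envelope's Fourier component at mod_hz."""
    mean = sum(env) / len(env)
    re = sum((e - mean) * math.cos(2 * math.pi * mod_hz * i / fs)
             for i, e in enumerate(env))
    im = sum((e - mean) * math.sin(2 * math.pi * mod_hz * i / fs)
             for i, e in enumerate(env))
    return math.hypot(re, im) / len(env)


fs = 8000  # sample rate in Hz (assumed)
tone = am_tone(fs, duration=1.0, carrier_hz=2000.0, mod_hz=40.0, depth=0.8)
env = envelope(tone, window=16)

bands = [5, 10, 20, 40, 80, 160]  # probe modulation frequencies in Hz
energies = {f: modulation_energy(env, fs, f) for f in bands}
strongest = max(energies, key=energies.get)
print(strongest)  # prints 40 for this test tone
```

In a fuller model, this analysis would run separately on the output of every audio frequency band, giving a two-dimensional picture of audio frequency versus modulation frequency.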

What is that useful for? Analyzing the envelope modulation frequencies, especially for the higher audio frequency bands above 1 kHz, is very helpful for separating different sounds by their pitch. But explaining that in detail would lead too far for now.

Also, changes in sound contain a lot of information, in speech as in music. So the analysis of modulation plays a key role in speech and music processing. For example, speech intelligibility can be enhanced by increasing the amplitude modulations in a speech recording.

These modulation filter banks are sensitive to modulation frequencies of up to around 1 kHz, especially at higher audio frequencies. There’s a whole world of interesting phenomena and ideas revolving around modulation spectral analysis, but that would exceed the scope of this post. For now, keep in mind that we are very sensitive to amplitude modulation, a fact that could use some more awareness in the audio community.

Conclusions

And another lightning-fast journey comes to an end. So what are the important things that you should remember?

Before any sound information reaches the more conscious stages of our perception, it is massively preprocessed, decoded and analyzed. The results have nothing to do at all with something like a waveform. What matters most is the frequency content and some more advanced properties hidden in a signal like modulation frequencies and interaural differences.

It is this preprocessed information that feeds the higher-level pattern matching mechanisms, which help us understand speech, recognize instruments, analyze rhythm and harmony, and much more.

Photo credit: biologycorner via Foter.com / CC BY-NC