Auditory Masking: Knowing What You Don’t Hear

After the technical topics of the recent weeks, it’s time to talk about human hearing again. Let’s dip our toes into auditory masking!

High Resolution Audio – The State of the Debate

A recently published meta-study confirms that listeners are able to discriminate high resolution audio recordings from standard ones, at least to some degree. It pays to take a closer look.

Understanding Time-Frequency Segmentation

Humans are great at identifying, localizing and separating different sound sources in a complex mixture, like music. The key to sound source separation is time-frequency segmentation. Sounds great, huh? Let’s shed some light on these buzzwords.

Sound Localization Basics

Creating an illusion of space is a key element of music and sound design. But to be convincing, we need to understand the mechanisms of spatial hearing. The most important component of that is sound localization.

The Why and How of Blind Testing

Let’s face it: listening isn’t objective at all, especially when it comes to the subtle details. The influence of preconceptions and current state of mind can be huge. And if we can’t back up our impressions with measurements, the last resort for getting an objective view of sonic reality is blind testing. Here’s a short introduction to the basic issues and techniques.

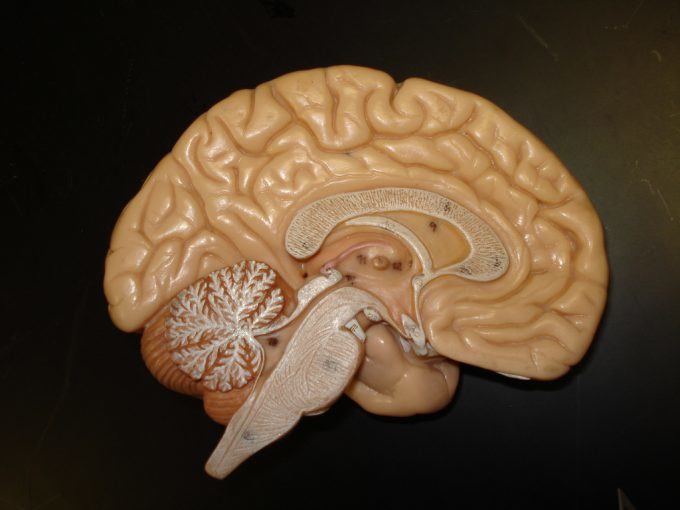

Know Your Ears – Neural Signal Processing

In the final stage of our little journey through the human auditory system, we get to a couple of fascinating implementations of neural signal processing algorithms.

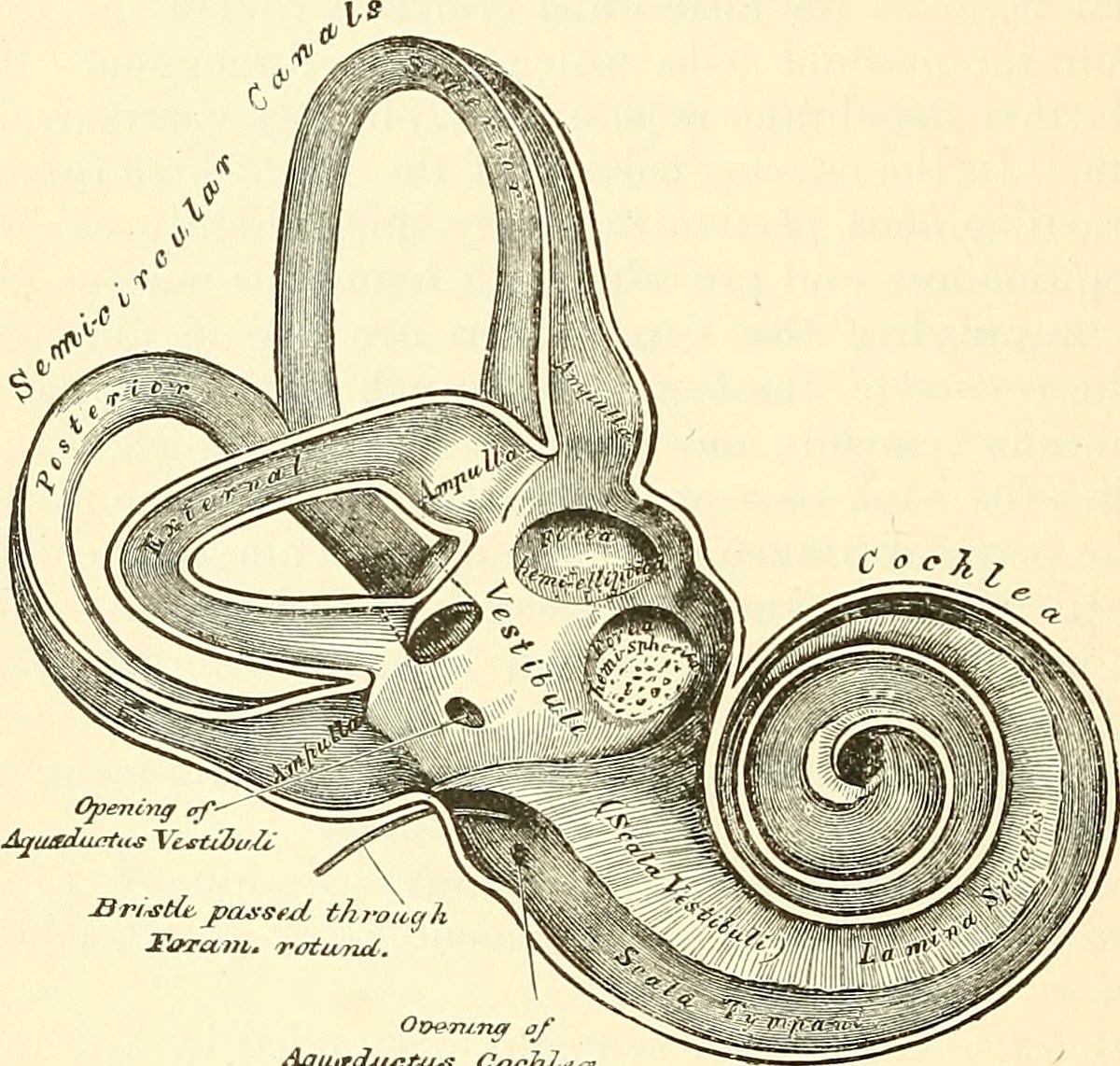

Know Your Ears – The Inner Ear

Having followed the path of sound vibration through the outer ear, ear canal and middle ear in the first part of this series, it is time to look at how vibration is transformed into nerve impulses in the inner ear.

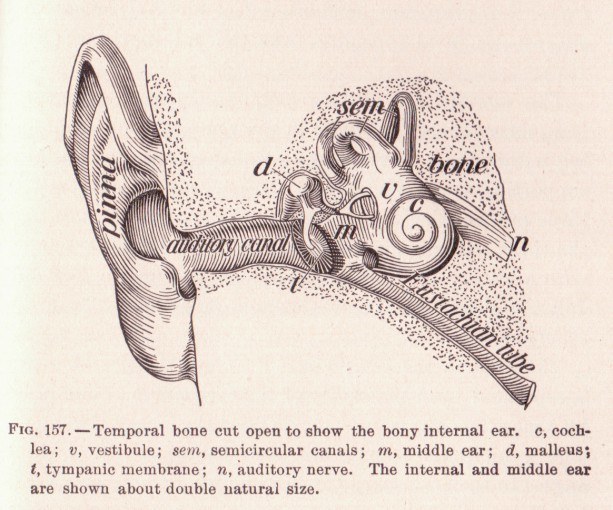

Know Your Ears – The Outer And Middle Ear

Sound travels a long way until we finally consciously perceive it. Even after arriving at our ears, it is converted, transformed and decoded multiple times. This is the first part of a series that walks us through the long journey from vibrating air to our consciousness.

Hearing: The Blessings and Curses

For working with audio, we have two tools at hand to assess what is going on: hearing and measurement. I already posted an article about the blessings and curses of audio measurement, to increase awareness about what we can and cannot achieve with it, and about the several traps installed along the way. And necessarily there must be a complementary post that deals with the even more important tool we use: our hearing.

About JENS: Sound Localization In The Median Plane

In a lot of ways, music mixing is all about creating a spatial impression and placing every instrument in its specific position in a virtual space. Besides the usual suspects like panning and interchannel delays for positioning in the horizontal plane and reverberation and equalization techniques to create a sense of distance, there is a third dimension of sound localization that is often overlooked: the median plane, which means localization in the front, back, above and below directions. Let’s have a closer look at the human abilities to distinguish these directions and at my neat FREE plug-in you can use to fool the ears a bit more when mixing.